RunPod: Affordable AI Cloud with Global GPU Deployment

RunPod offers a globally distributed GPU cloud platform built for AI development. Reduce costs with scalable serverless endpoints and streamlined operations.

| Detail | Value |
|---|---|
| Pricing | Paid, $0.20/hour |
| Semrush rank | 593.1k |
| Location | United States of America |
| Tech used | Next.js, Storyblok, React, Emotion, MUI, Node.js |

Features

- Wide Range of Environments: Choose from 50+ pre-configured environments or create your own for a customizable development workspace.

- Streamlined Training: Efficiently train your AI models with RunPod's optimized training process, saving time and resources.

- Scalable Deployment: Deploy and scale your AI applications effortlessly, with the ability to manage millions of inference requests.

- Global Availability: Deploy AI models in more than 30 regions worldwide for fast, reliable access to your applications.

- Limitless Ultra-fast Storage: Use NVMe storage to quickly scale your development environments, datasets, and models.

- Serverless Autoscaling: Automatically scale to hundreds of GPUs, pay only for what you use, and rely on a 99.99% uptime guarantee.

- Real-time Monitoring: Access real-time logs and metrics for seamless debugging and performance tracking of your deployments.

- Cost-effective Resource Management: Minimize expenses with pay-per-second pricing and avoid paying for idle GPUs.

- Enterprise-grade Security: Benefit from world-class compliance and security standards for peace of mind.
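To make the pay-per-second claim concrete, the sketch below compares prorated per-second billing with a hypothetical scheme that rounds usage up to whole hours. It assumes the $0.20/hour figure from the listing above as the rate; actual RunPod rates vary by GPU type, and the hourly-rounding function exists only for comparison, not as a description of any real provider's billing.

```python
from decimal import Decimal

# Assumption: the listing's $0.20/hour figure; real rates depend on the GPU chosen.
HOURLY_RATE_USD = Decimal("0.20")


def per_second_cost(seconds: int, hourly_rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Cost when usage is prorated to the second, rounded to 4 decimal places."""
    return (hourly_rate * seconds / 3600).quantize(Decimal("0.0001"))


def per_hour_cost(seconds: int, hourly_rate: Decimal = HOURLY_RATE_USD) -> Decimal:
    """Hypothetical comparison: cost if usage were rounded up to whole hours."""
    hours = -(-seconds // 3600)  # ceiling division on integers
    return hourly_rate * hours


if __name__ == "__main__":
    # Short jobs show the largest gap between the two billing schemes.
    for secs in (90, 1800, 3601):
        print(f"{secs:>5}s  per-second: ${per_second_cost(secs)}  per-hour: ${per_hour_cost(secs)}")
```

Under these assumptions, a 90-second inference job costs $0.005 with per-second billing versus $0.20 if rounded up to a full hour, which is the idle-cost gap the feature list refers to.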

Use Cases:

- AI Model Development: Leverage powerful GPU instances to develop and train AI models, starting with minimal setup.

- Production Scaling: Deploy and scale from zero to millions of inference requests with serverless endpoints, ideal for high-demand AI applications.

- Machine Learning Education: Utilize RunPod's accessible GPU resources for educational purposes, making advanced machine learning more approachable.

- Research and Innovation: Affordably run experiments and innovate in AI research with access to cutting-edge GPU technology.
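For the production-scaling use case, a RunPod Serverless worker is usually written as a Python handler function that receives a job dictionary, reads the request payload from `job["input"]`, and returns a JSON-serializable result. The minimal sketch below shows that shape. The `prompt` field, the uppercase "inference" step, and the `{"error": ...}` return convention are hypothetical placeholders. The RunPod SDK registration call is left as a comment so the handler can be tested locally without the `runpod` package installed.

```python
from typing import Any, Dict


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Process one serverless job; the request payload arrives under job["input"]."""
    job_input = job.get("input") or {}
    prompt = job_input.get("prompt")  # hypothetical input field
    if not isinstance(prompt, str) or not prompt:
        # Assumed convention: report bad input as an "error" key in the result.
        return {"error": "missing 'prompt' string in input"}
    # Placeholder for real model inference (hypothetical).
    return {"output": prompt.upper(), "length": len(prompt)}


# To run as a RunPod Serverless worker (requires `pip install runpod`):
#   import runpod
#   runpod.serverless.start({"handler": handler})

if __name__ == "__main__":
    # Local smoke test with a job shaped like a serverless request.
    print(handler({"id": "local-test", "input": {"prompt": "hello"}}))
```

Keeping the handler a plain function means the same code can be unit-tested locally and then deployed unchanged, with the platform handling scaling from zero to many workers.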

RunPod’s scalable, secure, and cost-effective GPU cloud platform is ideal for developers and organizations looking to develop, train, and deploy AI models. With its global reach and serverless solutions, it accelerates AI application development while minimizing operational overhead.

RunPod Alternatives:

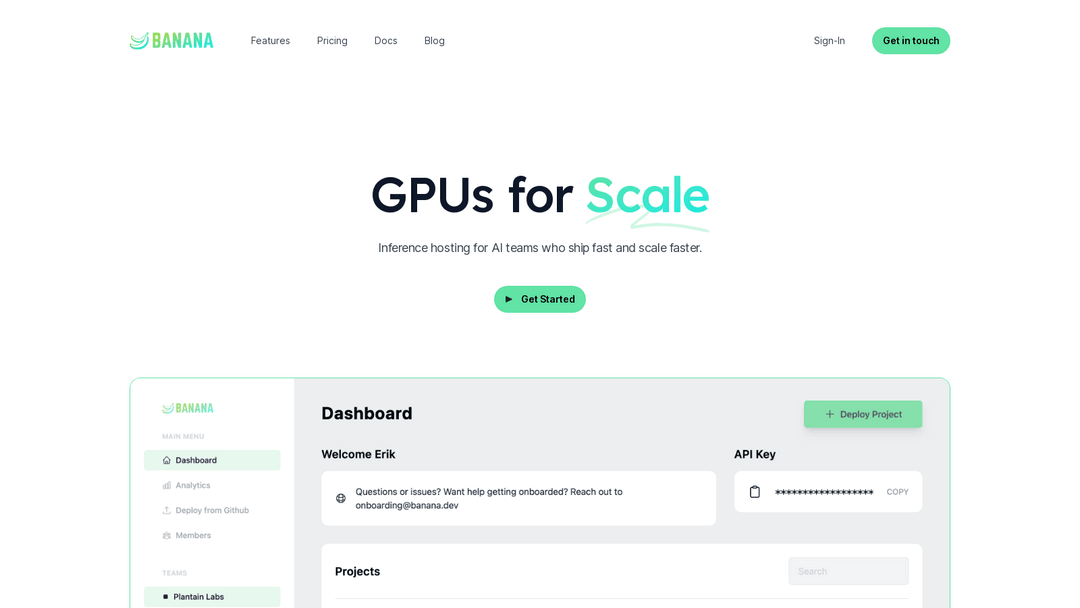

1. Banana

Scalable, cost-effective GPU hosting with autoscaling for AI deployment and management.

2. FunctionHub

FunctionHub is a platform for building AI applications and for testing and deploying serverless functions.

3. MLnative

Streamlines machine learning model deployment, management, and inference securely and efficiently.

4. Evoke

Cloud API for running and managing AI models with secure storage and deployment.